- Definition of Artificial Intelligence (AI)

- Overview of Key Aspects of AI

- What is Intelligence?

- Components of Intelligence

- Methods and Goals in AI

- Artificial General Intelligence (AGI), Applied AI, and Cognitive Simulation

- The Origins of AI

- Early Developments in AI

- Knowledge-Based Systems

- The Rise of Connectionism

- New Foundations in AI

- AI in the 21st Century

- Risks and Challenges

- FAQs

Definition of Artificial Intelligence (AI)

Artificial Intelligence (AI) refers to the simulation of human intelligence processes by machines, particularly computer systems. It involves the development of algorithms and models that enable machines to perform tasks that typically require human intelligence, such as learning, reasoning, problem-solving, perception, and language understanding.

Overview of Key Aspects of AI

AI encompasses a wide range of technologies and applications that aim to replicate or augment human cognitive abilities. From machine learning algorithms to expert systems, Artificial Intelligence is utilized in various fields to automate tasks, make predictions, and enhance decision-making processes. Understanding the fundamental aspects of intelligence is crucial to developing effective AI systems.

What is Intelligence?

Defining Intelligence

Intelligence can be defined as the ability to acquire and apply knowledge, solve problems, adapt to new situations, and learn from experience. It involves the capacity to reason, plan, understand complex ideas, and communicate effectively. In the context of AI, intelligence refers to the ability of machines to perform cognitive tasks that typically require human intelligence.

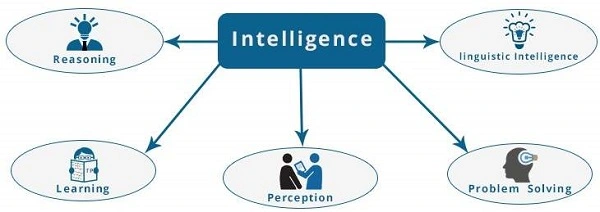

Components of Intelligence

Learning

Learning is the process of acquiring new knowledge or skills. In the context of Artificial Intelligence, machine learning algorithms enable systems to learn from data and improve their performance on tasks over time, without being explicitly programmed. For example, a machine learning model trained on a large dataset of images can learn to recognize and classify different objects, such as cars, animals, or buildings.
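
As a rough illustration of learning from data, the sketch below trains a nearest-centroid classifier. It is a toy stand-in, not how production image classifiers work: instead of images it uses two hand-picked numeric features, and the data set, feature choice, and function names (`train_centroids`, `classify`) are assumptions made for this example.

```python
from collections import defaultdict
from math import dist


def train_centroids(samples):
    """Average the feature vectors of each label to get one centroid per class."""
    grouped = defaultdict(list)
    for features, label in samples:
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(vectors) for column in zip(*vectors))
        for label, vectors in grouped.items()
    }


def classify(centroids, features):
    """Return the label whose centroid is closest to the given features."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical toy data: (length in metres, number of wheels) -> label.
TRAINING_DATA = [
    ((4.5, 4), "car"),
    ((4.2, 4), "car"),
    ((1.8, 2), "bicycle"),
    ((1.7, 2), "bicycle"),
]

if __name__ == "__main__":
    model = train_centroids(TRAINING_DATA)
    print(classify(model, (4.0, 4)))  # classify an example not seen in training
```

Nothing in `classify` mentions cars or bicycles. The behaviour comes entirely from the training examples, which is the sense in which the system is "not explicitly programmed".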

Reasoning

Reasoning is the ability to think logically and make inferences based on available information. Artificial Intelligence systems use reasoning mechanisms to process data, make decisions, and solve problems. For instance, a chatbot powered by natural language processing can use reasoning to understand the user’s intent, retrieve relevant information, and provide an appropriate response.

Problem Solving

Problem-solving is the process of finding solutions to complex or challenging issues. AI algorithms are designed to analyze problems, identify patterns, and generate solutions. A great example is the use of AI in chess or Go, where computer programs can analyze millions of possible moves and strategies to outperform even the most skilled human players.
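
The idea of searching through possible moves can be sketched on a much smaller game than chess or Go. The example below exhaustively searches a toy stone-taking game (players alternately take 1 to 3 stones, and whoever takes the last stone wins). The game and the names `can_win` and `best_move` are assumptions chosen for illustration. Real chess and Go programs cannot search exhaustively and rely on pruning, heuristics, or learned evaluation instead.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def can_win(stones):
    """True if the player to move can force a win with `stones` left."""
    # Taking the last stone wins, so a move is winning if it leaves the
    # opponent in a position from which they cannot force a win.
    return any(
        not can_win(stones - take)
        for take in (1, 2, 3)
        if take <= stones
    )


def best_move(stones):
    """Return a winning number of stones to take, or None if every move loses."""
    for take in (1, 2, 3):
        if take <= stones and not can_win(stones - take):
            return take
    return None


if __name__ == "__main__":
    for pile in range(1, 9):
        print(pile, can_win(pile), best_move(pile))
```

The cache means each pile size is evaluated only once, so the search stays cheap even though the recursion explores every line of play.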

Perception

Perception is the ability to interpret sensory information and understand the environment. In Artificial Intelligence, perception involves tasks like image recognition, speech understanding, and sensor data processing. For example, self-driving cars use computer vision and sensor fusion to perceive their surroundings, detect obstacles, and navigate safely.

Language

Language is a fundamental aspect of human communication and cognition. Artificial Intelligence systems are developed to understand, generate, and process natural language, enabling interactions with users through speech recognition, language translation, and text analysis. Virtual assistants like Siri or Alexa use natural language processing to interpret user commands and provide helpful responses.

By understanding these key components of intelligence, we can better appreciate the capabilities and potential of AI systems to mimic and enhance human cognitive abilities across a wide range of applications.

Methods and Goals in AI

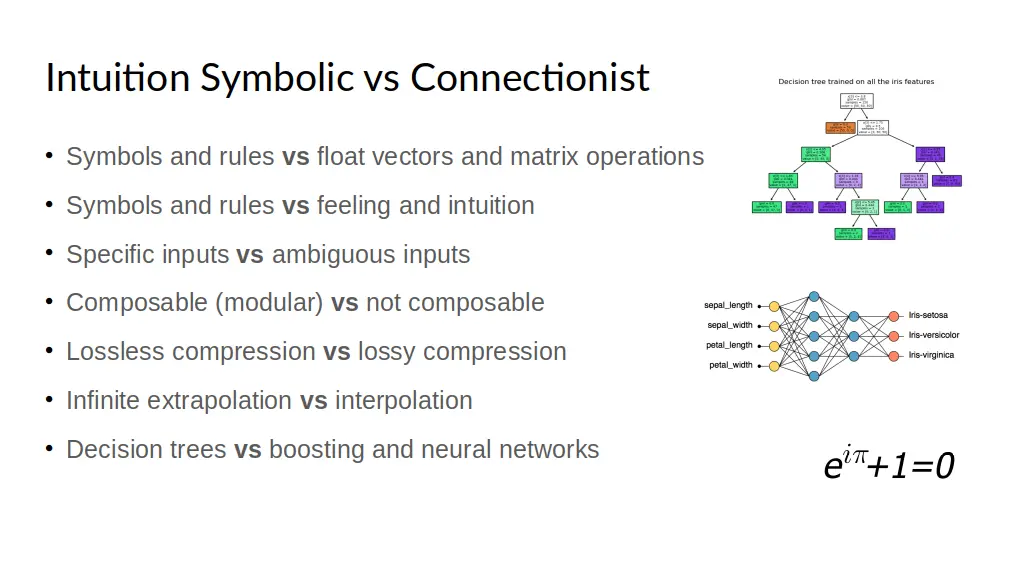

Symbolic vs. Connectionist Approaches

In the field of Artificial Intelligence (AI), research and development have been guided by two main approaches: symbolic AI and connectionist AI.

Symbolic Artificial Intelligence

Symbolic Artificial Intelligence, also known as classical Artificial Intelligence, focuses on manipulating symbols according to predefined rules. This approach involves representing knowledge in a structured format and using logic-based reasoning to derive conclusions. Expert systems and knowledge-based systems are examples of applications that follow the symbolic Artificial Intelligence approach.
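
A minimal sketch of rule-based symbol manipulation is forward chaining: start from known facts and keep applying if-then rules until nothing new can be derived. The rule format, the example rules, and the name `forward_chain` are assumptions for this illustration, not taken from any particular system.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known.
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical rules: (set of premises, conclusion).
ANIMAL_RULES = [
    ({"has_feathers"}, "is_bird"),
    ({"is_bird", "can_fly"}, "can_migrate"),
    ({"has_fur"}, "is_mammal"),
]

if __name__ == "__main__":
    print(sorted(forward_chain({"has_feathers", "can_fly"}, ANIMAL_RULES)))
```

Every conclusion can be traced back to the rules that produced it, which is one reason symbolic systems are often considered easier to inspect than learned models.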

Connectionist Artificial Intelligence

Connectionist Artificial Intelligence, inspired by the structure and function of the human brain, involves building artificial neural networks that can learn from data through interconnected nodes. Deep learning, a subset of connectionist AI, has shown remarkable success in tasks like image recognition and natural language processing.

Artificial General Intelligence (AGI), Applied AI, and Cognitive Simulation

Artificial General Intelligence (AGI)

AGI refers to AI systems that can perform any intellectual task that a human can. Achieving AGI remains a long-term goal in AI research, as it involves creating machines with human-like cognitive abilities across a wide range of domains.

Applied AI

Applied AI, also known as narrow AI, focuses on developing AI systems for specific tasks or domains. Examples include speech recognition, recommendation systems, and autonomous vehicles. Applied AI has seen significant advancements and widespread adoption in various industries.

Cognitive Simulation

Cognitive simulation involves creating Artificial Intelligence models that simulate human cognitive processes like learning, reasoning, and problem-solving. These simulations aim to understand and replicate human intelligence, providing insights into how the mind works and informing the development of AI systems.

The Origins of AI

Alan Turing and the Beginning of Artificial Intelligence

Alan Turing, a pioneering mathematician and computer scientist, laid the groundwork for AI with his theoretical work on computation and intelligence. His seminal paper “Computing Machinery and Intelligence” introduced the concept of machine intelligence and proposed the famous Turing Test as a measure of AI capabilities.

Theoretical Work and Early Milestones

Early AI research saw significant theoretical developments, including the exploration of logical reasoning, problem-solving algorithms, and the foundations of AI programming languages. These foundational concepts paved the way for the development of AI systems that could mimic human cognitive processes.

The Turing Test

The Turing Test, proposed by Alan Turing in 1950, is a benchmark for evaluating a machine’s ability to exhibit intelligent behavior indistinguishable from that of a human. This test remains a fundamental concept in AI research and has influenced the development of AI systems that can engage in natural language dialogue and reasoning.

Early Developments in AI

The First AI Programs

The early days of AI saw the creation of the first AI programs designed to perform specific tasks, such as logical reasoning, pattern recognition, and game playing. These programs laid the foundation for further advancements in AI research and the development of more sophisticated AI systems.

Evolutionary Computing

Evolutionary computing, inspired by biological evolution, involves algorithms that mimic the process of natural selection to optimize solutions to complex problems. Genetic algorithms and evolutionary strategies are examples of evolutionary computing techniques used in AI applications.
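
The selection, crossover, and mutation loop behind a genetic algorithm can be sketched as follows. The problem (maximize the number of 1s in a bit string, often called OneMax), the parameter values, and the function names are assumptions chosen to keep the example small.

```python
import random


def fitness(bits):
    """OneMax: count the 1s; the all-ones string is the optimum."""
    return sum(bits)


def evolve(length=20, population_size=30, generations=40, mutation_rate=0.02, seed=0):
    """Return the best fitness per generation, starting with the initial population."""
    rng = random.Random(seed)
    population = [
        [rng.randint(0, 1) for _ in range(length)] for _ in range(population_size)
    ]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        survivors = population[: population_size // 2]  # selection: keep the fitter half
        children = [population[0][:]]  # elitism: carry the best over unchanged
        while len(children) < population_size:
            mother, father = rng.sample(survivors, 2)
            cut = rng.randrange(1, length)  # one-point crossover
            child = mother[:cut] + father[cut:]
            # Mutation: flip each bit with a small probability.
            child = [bit ^ 1 if rng.random() < mutation_rate else bit for bit in child]
            children.append(child)
        population = children
    history.append(max(fitness(individual) for individual in population))
    return history


if __name__ == "__main__":
    print(evolve())
```

Because the best individual is always copied into the next generation, the best fitness can never decrease from one generation to the next. Whether the optimum is actually reached depends on the parameters and the random seed.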

Logical Reasoning and Problem Solving

AI systems have been developed to perform logical reasoning and problem-solving tasks, such as theorem proving, planning, and decision-making. These capabilities are essential for developing AI systems that can autonomously solve complex problems in various domains.

English Dialogue

Early AI research explored the challenges of natural language understanding and dialogue systems. Programs like ELIZA, developed in the 1960s, demonstrated early attempts at simulating human conversation and engaging in text-based interactions.
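
Programs in this style relied largely on matching patterns in the user's text and filling in canned reply templates. The sketch below shows that general idea only. The rules and replies are invented for this example and are not ELIZA's original script.

```python
import re

# Hypothetical rules: (pattern, reply template). Not ELIZA's original script.
DIALOGUE_RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bbecause\b", re.IGNORECASE), "Is that the real reason?"),
]
DEFAULT_REPLY = "Please tell me more."


def respond(message):
    """Return the reply for the first rule whose pattern matches the message."""
    for pattern, template in DIALOGUE_RULES:
        match = pattern.search(message)
        if match:
            # Drop trailing punctuation so the echoed phrase reads naturally.
            captured = [group.rstrip(".!?") for group in match.groups()]
            return template.format(*captured)
    return DEFAULT_REPLY


if __name__ == "__main__":
    for line in ["I need a holiday.", "I am tired", "Hello there"]:
        print(respond(line))
```

The program has no model of what a holiday or tiredness is. It only rearranges the user's own words, which is why such systems can appear conversational without understanding the conversation.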

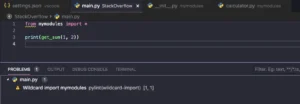

AI Programming Languages

The development of specialized AI programming languages, such as LISP and Prolog, facilitated the implementation of AI algorithms and systems. These languages provided tools and frameworks for researchers and developers to create AI applications and experiment with different approaches to AI problem-solving.

Microworld Programs

Microworld programs, like SHRDLU, developed by Terry Winograd around 1970, simulated small-scale environments where AI systems could interact with objects and perform tasks. These programs helped researchers study how AI systems could understand and manipulate objects in a controlled setting, advancing the field of AI research.

Knowledge-Based Systems

Expert Systems

Expert systems are AI programs that emulate the decision-making abilities of a human expert in a specific domain. These systems use knowledge bases and inference engines to provide expert-level advice or solutions to complex problems. They have been applied in various fields, from medical diagnosis to financial analysis.
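
The split between a knowledge base and an inference engine can be sketched with a small backward-chaining prover: to establish a goal, it looks for a rule that concludes the goal and then tries to establish that rule's premises. The car-troubleshooting rules and the name `prove` are assumptions made for this example. Real expert systems used far larger rule sets and usually handled uncertainty as well.

```python
# Hypothetical knowledge base: each goal maps to alternative lists of premises.
KNOWLEDGE_BASE = {
    "replace_battery": [["battery_flat"]],
    "battery_flat": [["engine_silent", "lights_dim"]],
}


def prove(goal, facts, rules, _pending=frozenset()):
    """Backward chaining: a goal holds if it is a known fact, or if all
    premises of at least one rule that concludes it can be proved."""
    if goal in facts:
        return True
    if goal in _pending:  # guard against circular rules
        return False
    pending = _pending | {goal}
    return any(
        all(prove(premise, facts, rules, pending) for premise in premises)
        for premises in rules.get(goal, [])
    )


if __name__ == "__main__":
    observed = {"engine_silent", "lights_dim"}
    print(prove("replace_battery", observed, KNOWLEDGE_BASE))
```

The inference engine (`prove`) contains no domain knowledge, so the same function can be reused with a different knowledge base for a different domain.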

DENDRAL and MYCIN

DENDRAL and MYCIN were pioneering expert systems developed in the 1960s and 1970s. DENDRAL was designed for chemical analysis, while MYCIN focused on medical diagnosis. These systems demonstrated the potential of knowledge-based systems to replicate human expertise and make accurate decisions based on domain-specific knowledge.

The CYC Project

The CYC project, initiated in the 1980s, aimed to build a comprehensive knowledge base of common-sense knowledge. CYC sought to capture human-like reasoning abilities and enable AI systems to understand context, infer implicit knowledge, and make intelligent decisions based on a broad range of information.

The Rise of Connectionism

Creating Artificial Neural Networks

Connectionism, also known as neural network theory, involves building artificial neural networks that mimic the structure and function of the human brain. These networks consist of interconnected nodes that process information and learn from data through iterative adjustments to connection strengths.

Perceptrons

Perceptrons are the simplest form of artificial neural networks, capable of binary classification tasks. They paved the way for more complex neural network architectures and demonstrated the potential of connectionist approaches in pattern recognition and machine learning.
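
A single perceptron and its learning rule can be sketched in a few lines. The example below learns the logical AND function, a linearly separable task for which the rule is known to converge. The function names, the zero initial weights, and the learning rate of 1 are assumptions chosen for simplicity.

```python
def predict(weights, bias, inputs):
    """Output 1 if the weighted sum of the inputs exceeds zero, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=20):
    """Perceptron learning rule: adjust weights by the error on each sample."""
    weights = [0] * len(samples[0][0])
    bias = 0
    for _ in range(epochs):
        mistakes = 0
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            if error:
                mistakes += 1
                weights = [w + error * x for w, x in zip(weights, inputs)]
                bias += error
        if mistakes == 0:  # every sample is classified correctly
            break
    return weights, bias


# Truth table for logical AND: (inputs, expected output).
AND_GATE = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    weights, bias = train_perceptron(AND_GATE)
    print([predict(weights, bias, inputs) for inputs, _ in AND_GATE])
```

A single perceptron can only separate classes with a straight line (or hyperplane), so the same code would not learn XOR. That limitation is one motivation for the multi-layer architectures that followed.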

Conjugating Verbs

Neural networks have been used to model complex linguistic tasks, such as conjugating verbs in natural language processing. By learning patterns from data, neural networks can generate grammatically correct verb forms and demonstrate language understanding capabilities.

Other Neural Network Models

Beyond perceptrons, various neural network models have been developed, including convolutional neural networks (CNNs) for image recognition, recurrent neural networks (RNNs) for sequential data processing, and transformer models like Meta's Llama 3 for large-scale language modeling tasks.

New Foundations in AI

Nouvelle AI

Nouvelle AI is an approach pioneered by Rodney Brooks at MIT in the latter half of the 1980s. In contrast to symbolic AI, it sets aside the goal of human-level performance in favor of the more modest aim of insect-level competence in real-world environments. Rather than reasoning over a detailed internal model of the world, nouvelle AI systems build up intelligent behavior from simple behaviors that interact directly with their surroundings.

The Situated Approach

The situated approach in AI focuses on embedding AI systems in real-world contexts to enhance their understanding of the environment and improve decision-making. By considering the situational context, AI systems can adapt to dynamic conditions and interact more effectively with users.

AI in the 21st Century

Machine Learning

Machine learning has emerged as a dominant paradigm in AI, enabling systems to learn from data and improve performance on tasks without explicit programming. Techniques like deep learning have revolutionized fields such as computer vision, natural language processing, and speech recognition.

Autonomous Vehicles

AI technologies power autonomous vehicles, enabling them to perceive the environment, make real-time decisions, and navigate safely without human intervention. Self-driving cars represent a significant application of AI in transportation and are poised to transform the automotive industry.

Large Language Models and Natural Language Processing

Large language models, such as Meta's Llama 3, have demonstrated remarkable capabilities in natural language understanding and generation. These models are trained on vast amounts of text data and perform strongly on tasks like language translation, text generation, and sentiment analysis.

Virtual Assistants

Virtual assistants like Siri, Alexa, and Google Assistant leverage AI technologies to provide personalized assistance, answer queries, and perform tasks based on user interactions. These AI-powered assistants have become integral parts of daily life, enhancing productivity and convenience.

Risks and Challenges

Potential Risks of AI

As AI technologies advance, concerns about potential risks and ethical implications have emerged. Issues such as bias in AI algorithms, job displacement due to automation, and privacy concerns related to data collection pose challenges that must be addressed to ensure the responsible development and deployment of AI systems.

The Possibility of Artificial General Intelligence (AGI)

The concept of Artificial General Intelligence (AGI), where machines possess human-like cognitive abilities across diverse domains, raises questions about the implications of creating superintelligent systems. The pursuit of AGI poses challenges related to control, safety, and the societal impact of highly intelligent machines.

In conclusion, the evolution of AI from knowledge-based systems to connectionist approaches, the development of new foundations in AI research, and the widespread adoption of AI technologies in the 21st century highlight the transformative potential of artificial intelligence. While AI offers numerous opportunities for innovation and progress, addressing risks and challenges is essential to ensure the responsible and ethical advancement of AI for the benefit of society. The outlook for AI is promising, with continued advancements in machine learning, autonomous systems, and natural language processing, and with the potential to positively impact various industries and aspects of daily life.

FAQs

What is Artificial Intelligence (AI)?

Artificial Intelligence (AI) refers to the simulation of human intelligence processes by machines, particularly computer systems. It involves the development of algorithms and models that enable machines to perform tasks that typically require human intelligence, such as learning, reasoning, problem-solving, perception, and language understanding.

How is intelligence defined in the context of AI?

Intelligence can be defined as the ability to acquire and apply knowledge, solve problems, adapt to new situations, and learn from experience. In AI, intelligence refers to the ability of machines to perform cognitive tasks that typically require human intelligence, such as learning, reasoning, problem-solving, perception, and language understanding.

What are the main approaches in AI?

In AI research and development, two main approaches are symbolic AI and connectionist AI. Symbolic AI focuses on manipulating symbols according to predefined rules, while connectionist AI involves building artificial neural networks that can learn from data through interconnected nodes.

How has AI evolved in the 21st century?

In the 21st century, AI has seen significant advancements in machine learning, autonomous systems, natural language processing, and robotics. Applications like autonomous vehicles, virtual assistants, and large language models have become increasingly prevalent, shaping various industries and aspects of daily life.

What are the potential risks and challenges associated with AI?

As AI technologies advance, concerns about potential risks and ethical implications have emerged. Issues such as bias in AI algorithms, job displacement due to automation, and privacy concerns related to data collection pose challenges that must be addressed to ensure the responsible development and deployment of AI systems.