- Introduction to Machine Learning

- Understanding the Core Concepts of Machine Learning

- Setting Up the Python Environment for Machine Learning

- Data Preparation and Exploration

- Supervised Learning Algorithms

- Unsupervised Learning Algorithms

- Model Evaluation and Performance Metrics

- Introduction to Model Deployment

- Resources for Further Learning

- Conclusion

Introduction to Machine Learning

Machine learning, a subfield of artificial intelligence, is an approach to solving complex problems and making predictions based on data. It is a rapidly evolving field that focuses on designing algorithms and models that enable computers to learn from data and make decisions or predictions without being explicitly programmed for each task. Machine learning uses statistical techniques so that systems can improve their performance as they process more data.

At its core, machine learning is driven by the concept of “learning.” It involves training a model on a dataset, allowing it to identify patterns and relationships within the data. This training process enables the model to make accurate predictions or decisions when presented with new, unseen data. The key advantage of machine learning lies in its ability to handle vast amounts of information and discover intricate patterns that may not be immediately apparent to humans.

Machine learning finds applications in a wide range of domains, including finance, healthcare, marketing, and autonomous systems. In finance, it can be used to predict stock market trends or detect fraudulent transactions. In healthcare, machine learning algorithms can assist in disease diagnosis and drug discovery. Marketing professionals leverage machine learning to analyze customer behavior and tailor personalized recommendations. Autonomous systems, such as self-driving cars, rely on machine learning algorithms to process sensory data and make real-time decisions.

Definition and Importance of Machine Learning

Machine learning is a field that enables computers to learn from data and make decisions without explicit programming. It involves developing algorithms and models that enable systems to automatically improve their performance as they process more information. With its ability to uncover hidden patterns and relationships within vast datasets, machine learning has become increasingly important across numerous industries.

The importance of machine learning lies in its capacity to revolutionize problem-solving and decision-making processes. By analyzing large volumes of data, machine learning algorithms can uncover valuable insights and patterns that might not be apparent to humans. This enables businesses to make data-driven decisions, optimize processes, and gain a competitive edge. Moreover, in domains such as healthcare, finance, and autonomous systems, machine learning plays a crucial role in enhancing accuracy, efficiency, and safety.

Applications of Machine Learning in Various Industries

Machine learning has found applications across a wide range of industries, changing existing processes and enabling new capabilities. Here are some notable applications of machine learning in different sectors:

| Industry | Applications of Machine Learning |
|---|---|
| Finance | Fraud detection, credit scoring, algorithmic trading |
| Healthcare | Disease diagnosis, drug discovery, patient monitoring |
| Marketing | Customer segmentation, personalized recommendations |
| Retail | Demand forecasting, inventory management |
| Manufacturing | Predictive maintenance, quality control |
| Transportation | Autonomous vehicles, route optimization |
| Energy | Energy consumption optimization, predictive analytics |
| Education | Personalized learning, adaptive assessments |
| Agriculture | Crop yield prediction, disease detection |
| Gaming | Player behavior analysis, virtual assistant |

Please note that this table provides a general overview, and machine learning applications can vary within each industry. The versatility of machine learning allows it to address diverse challenges and unlock new opportunities across multiple sectors.

Benefits and Challenges of Implementing Machine Learning

| Benefits | Challenges |
|---|---|
| Enhanced Decision-Making | Data Quality and Availability |
| Automation and Efficiency | Skilled Workforce and Expertise |
| Personalization and Customer Experience | Interpretability and Transparency |
| Improved Accuracy and Predictability | Scalability and Deployment |

Understanding the Core Concepts of Machine Learning

Supervised, Unsupervised, and Reinforcement Learning

Machine learning can be broadly categorized into three main types: supervised learning, unsupervised learning, and reinforcement learning.

Supervised Learning

In supervised learning, the machine learning model learns from labeled examples. It is provided with a dataset where each data point is associated with a corresponding label or target value. The model learns to map input features to the correct output labels by generalizing from the labeled examples. Common supervised learning tasks include classification (predicting discrete labels) and regression (predicting continuous values).

Unsupervised Learning

Unsupervised learning involves training models on unlabeled data. The goal is to discover underlying patterns, structures, or relationships in the data without explicit guidance. Unsupervised learning algorithms can perform tasks such as clustering (grouping similar data points together) and dimensionality reduction (reducing the number of features while preserving important information).

Reinforcement Learning

Reinforcement learning is an interactive learning process where an agent learns to make sequential decisions through trial and error. The agent receives feedback in the form of rewards or penalties based on its actions. The goal of reinforcement learning is to find an optimal policy or strategy that maximizes the cumulative rewards over time.
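
To make the trial-and-error loop concrete, here is a minimal sketch of an epsilon-greedy agent on a multi-armed bandit, which is a deliberately simplified reinforcement learning setting with no states. The environment, the reward values, and the function name `run_bandit` are assumptions made up for illustration.

```python
import random


def run_bandit(mean_rewards, steps=500, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a toy multi-armed bandit (hypothetical environment)."""
    rng = random.Random(seed)
    n_arms = len(mean_rewards)
    counts = [0] * n_arms        # how often each action was tried
    estimates = [0.0] * n_arms   # running average reward per action

    def pull(arm):
        # The environment returns a noisy reward around the arm's true mean.
        return mean_rewards[arm] + rng.uniform(-0.05, 0.05)

    for step in range(steps):
        if step < n_arms:
            arm = step                                   # try every action once
        elif rng.random() < epsilon:
            arm = rng.randrange(n_arms)                  # explore: random action
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit
        reward = pull(arm)
        counts[arm] += 1
        # Incrementally update the average reward observed for this action.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]

    best_arm = max(range(n_arms), key=lambda a: estimates[a])
    return best_arm, estimates, counts


best_arm, estimates, counts = run_bandit([0.2, 0.8, 0.5])
print("Best action found:", best_arm)
print("Estimated rewards:", [round(e, 2) for e in estimates])
```

The agent mostly repeats the action with the highest estimated reward but occasionally tries a random one, which is the simplest form of the exploration versus exploitation trade-off.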

Key Terminologies: Features, Labels, Training, and Testing Data

In machine learning, understanding key terminologies such as features, labels, training data, and testing data is essential. Let’s explore these concepts and see how they can be implemented using Python:

Features

Features are the measurable characteristics or attributes of the data used as input for machine learning models. They can be represented as variables or columns in a dataset. In Python, features are typically stored in a data structure like a NumPy array or a Pandas DataFrame. Here’s an example of defining features using Pandas:

import pandas as pd

# Define features as columns in a DataFrame

data = pd.DataFrame({'feature1': [1, 2, 3, 4],

'feature2': [0.5, 1.5, 2.5, 3.5]})

Labels

Labels, also known as target variables, are the values we want to predict or classify in supervised learning. Labels correspond to the desired output of the machine learning model. In Python, labels are typically stored in a separate array or column. Here’s an example of defining labels using NumPy:

import numpy as np

# Define labels as a NumPy array

labels = np.array([0, 1, 0, 1])

Training Data

Training data is used to train a machine learning model. It consists of both the features and their corresponding labels. In Python, we can split the data into features and labels using indexing or slicing operations. Here’s an example:

# Split the data into features and labels

X_train = data[['feature1', 'feature2']]

y_train = labels

Testing Data

Testing data is used to evaluate the performance of the trained machine learning model. It has the same structure as the training data (features plus labels), but the model never sees it during training. The model predicts from the test features alone, and those predictions are then compared with the held-out labels. Here’s an example of defining testing features and labels:

# Define testing features and labels

X_test = pd.DataFrame({'feature1': [5, 6],

'feature2': [4.5, 5.5]})

y_test = np.array([0, 1])

Understanding these key terminologies and implementing them using Python allows us to effectively work with features, labels, training data, and testing data in machine learning tasks.

The Role of Algorithms in Machine Learning

Algorithms play a crucial role in machine learning as they provide the mathematical and computational frameworks for learning from data. Different algorithms are designed to handle specific types of learning tasks and datasets. These algorithms process the training data to adjust internal parameters and create a model that can make predictions or decisions on new, unseen data.

Machine learning algorithms can range from simple and interpretable models like linear regression or decision trees to complex and powerful models like deep neural networks. The choice of algorithm depends on the nature of the problem, the available data, and the desired trade-offs between accuracy, interpretability, and computational efficiency.

While algorithms are essential, it’s important to note that the success of a machine learning project also relies on proper data preprocessing, feature engineering, hyperparameter tuning, and model evaluation techniques. These steps help ensure the quality and performance of the machine learning model.
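
As a rough illustration of what "adjusting internal parameters" means, the sketch below fits a straight line with plain gradient descent. The data, the learning rate, and the function name `fit_line` are assumptions chosen for this example; libraries such as scikit-learn use more robust solvers.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction error for every training example with the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Move the parameters a small step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]  # generated from y = 2x + 1
w, b = fit_line(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

The two numbers `w` and `b` are the model's internal parameters; training repeatedly nudges them so that predictions move closer to the labels.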

Setting Up the Python Environment for Machine Learning

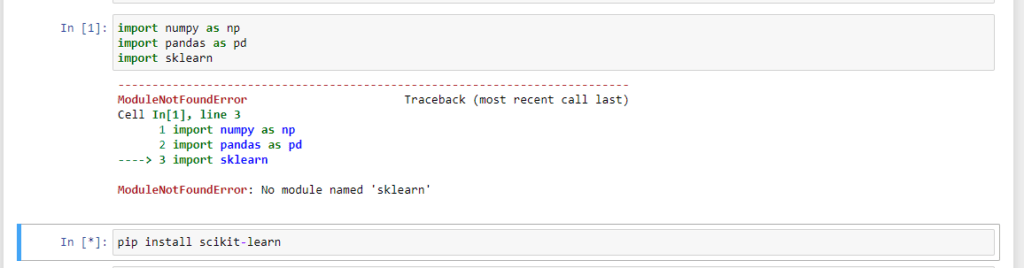

Installing Python and Required Libraries (e.g., NumPy, Pandas, Scikit-learn)

To get started with Python and the necessary libraries for machine learning, follow the steps below:

Python Installation

- Visit the official Python website at https://www.python.org/.

- Download the latest version of Python for your operating system.

- Run the installer and follow the prompts to install Python.

Library Installation

Open a terminal or command prompt.

NumPy Installation:

pip install numpy

Pandas Installation:

pip install pandas

Scikit-learn Installation:

pip install scikit-learn

These commands will install the required libraries for performing various machine learning tasks in Python.

Verify Installation

To verify that the libraries are installed correctly, open a Python interpreter or create a Python script and import the libraries:

import numpy as np

import pandas as pd

import sklearn

Run the script or execute the interpreter. If there are no error messages, the libraries are successfully installed.

By following these steps, you will have Python installed along with the necessary libraries (NumPy, Pandas, and Scikit-learn) to start your machine learning journey.
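
If you prefer a check that reports what is missing instead of stopping at the first failed import, a small script along these lines may help. It is only a sketch; the helper name `check_libraries` is made up here. Note that scikit-learn is imported under the name `sklearn`.

```python
import importlib
import importlib.util


def check_libraries(names=("numpy", "pandas", "sklearn")):
    """Return {import_name: version string, or None if the library is not installed}."""
    status = {}
    for name in names:
        if importlib.util.find_spec(name) is None:
            status[name] = None          # library not found in this environment
            continue
        module = importlib.import_module(name)
        status[name] = getattr(module, "__version__", "unknown")
    return status


status = check_libraries()
for name, version in status.items():
    print(f"{name}: {version or 'NOT INSTALLED'}")
```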

Data Preparation and Exploration

Importing and Loading Datasets

When working with machine learning, the first step is to import and load the datasets into your Python environment. You can use various libraries such as Pandas or NumPy to accomplish this. Here’s an example of loading a CSV file using Pandas:

import pandas as pd

# Load dataset from a CSV file

data = pd.read_csv('dataset.csv')
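
After loading, it is usually worth a quick look at what Pandas actually read. The sketch below uses an in-memory string in place of a real file so it runs on its own; the column names and values are hypothetical.

```python
import io

import pandas as pd

# Hypothetical CSV content standing in for 'dataset.csv'.
csv_text = """feature1,feature2,label
1,0.5,0
2,1.5,1
3,2.5,0
4,3.5,1
"""

data = pd.read_csv(io.StringIO(csv_text))

print(data.shape)    # (number of rows, number of columns)
print(data.dtypes)   # data type inferred for each column
print(data.head())   # first rows of the table
```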

Handling Missing Data and Data Cleaning

Datasets often contain missing values or inconsistencies that need to be addressed. You can handle missing data by either imputing the missing values or removing the corresponding rows or columns. Data cleaning involves tasks such as removing duplicates, correcting errors, or transforming data. Here’s an example using Pandas to handle missing data:

# Count missing values in each column

print(data.isnull().sum())

# Impute missing values in numeric columns with the column mean

data.fillna(data.mean(numeric_only=True), inplace=True)

# Remove duplicates

data.drop_duplicates(inplace=True)
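
The following self-contained sketch runs the same steps on a tiny, made-up DataFrame so the effect of each call is visible. The values are assumptions chosen to include one missing entry and one duplicated row.

```python
import numpy as np
import pandas as pd

# Hypothetical data with one missing value and one duplicated row.
data = pd.DataFrame({
    "feature1": [1.0, 2.0, np.nan, 4.0, 4.0],
    "feature2": [0.5, 1.5, 2.5, 3.5, 3.5],
})

missing_per_column = data.isnull().sum()
print(missing_per_column)

# Impute with the column mean, computed from the non-missing values.
imputed = data.fillna(data.mean(numeric_only=True))

# Drop exact duplicate rows and renumber the index.
cleaned = imputed.drop_duplicates().reset_index(drop=True)
print(cleaned)
```

The order of the steps matters: here the mean is computed while the duplicated row is still present, so removing duplicates first would give a different imputed value.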

Exploratory Data Analysis (EDA) Techniques

Exploratory Data Analysis helps to gain insights and understand the underlying patterns and relationships in the data. It involves statistical techniques, visualizations, and summary statistics. Libraries like Pandas and Matplotlib are commonly used for EDA. Here’s an example of generating a scatter plot using Matplotlib:

import matplotlib.pyplot as plt

# Scatter plot

plt.scatter(data['feature1'], data['feature2'])

plt.xlabel('Feature 1')

plt.ylabel('Feature 2')

plt.title('Scatter Plot')

plt.show()
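
Plots are often paired with numeric summaries. As a small sketch on made-up data, `describe()` and `corr()` give summary statistics and pairwise correlations:

```python
import pandas as pd

# Hypothetical data; feature2 is an exact linear function of feature1.
data = pd.DataFrame({
    "feature1": [1, 2, 3, 4, 5],
    "feature2": [0.5, 1.5, 2.5, 3.5, 4.5],
})

summary = data.describe()    # count, mean, std, min, quartiles, max per column
correlation = data.corr()    # pairwise Pearson correlation between columns

print(summary)
print(correlation)
```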

Supervised Learning Algorithms

Linear Regression: Predicting Continuous Values

Linear regression predicts a continuous value by fitting a linear relationship between the input features and the target variable. You can use the scikit-learn library to implement linear regression:

from sklearn.linear_model import LinearRegression

# Create a Linear Regression model

model = LinearRegression()

# Fit the model to the training data

model.fit(X_train, y_train)

# Make predictions on the testing data

predictions = model.predict(X_test)
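
To see what the fitted model contains, the sketch below trains on noise-free data generated from a known line and then reads back the learned slope and intercept. The data is an assumption made for illustration; real data will not fit this cleanly.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Hypothetical training data generated from y = 3x + 2 without noise.
X_train = np.array([[0.0], [1.0], [2.0], [3.0]])
y_train = 3.0 * X_train[:, 0] + 2.0

model = LinearRegression()
model.fit(X_train, y_train)

slope = model.coef_[0]          # learned weight for the single feature
intercept = model.intercept_    # learned constant term
prediction = model.predict(np.array([[10.0]]))[0]

print(f"slope = {slope:.2f}, intercept = {intercept:.2f}, prediction = {prediction:.2f}")
```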

Logistic Regression: Binary Classification

Logistic regression is a widely used algorithm for binary classification problems. It predicts the probability of an input belonging to a specific class. Here’s an example of implementing logistic regression:

from sklearn.linear_model import LogisticRegression

# Create a Logistic Regression model

model = LogisticRegression()

# Fit the model to the training data

model.fit(X_train, y_train)

# Make predictions on the testing data

predictions = model.predict(X_test)
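
Under the hood, logistic regression computes a weighted sum of the features and passes it through the sigmoid function to obtain a probability. The sketch below shows that step by hand; the weights and bias are hypothetical values, not parameters learned from any dataset.

```python
import math


def predict_probability(features, weights, bias):
    """Probability of the positive class under a logistic regression model."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-score))   # sigmoid maps the score into (0, 1)


# Hypothetical parameters, chosen only for illustration.
weights = [1.2, -0.7]
bias = -0.5

p = predict_probability([2.0, 1.0], weights, bias)
label = 1 if p >= 0.5 else 0   # 0.5 is a common default threshold
print(f"probability = {p:.3f}, predicted class = {label}")
```

With a fitted scikit-learn model, `model.predict_proba(X_test)` returns these probabilities for each class.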

Decision Trees and Random Forests: Multiclass Classification

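Decision trees and random forests are popular algorithms for classification, including multiclass tasks. A decision tree learns a tree-like sequence of decisions based on the input features, while a random forest trains many such trees on random subsets of the data and combines their votes. You can use scikit-learn to implement both: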

from sklearn.tree import DecisionTreeClassifier

from sklearn.ensemble import RandomForestClassifier

# Create a Decision Tree Classifier

tree_model = DecisionTreeClassifier()

# Fit the model to the training data

tree_model.fit(X_train, y_train)

# Make predictions on the testing data

tree_predictions = tree_model.predict(X_test)

# Create a Random Forest Classifier

forest_model = RandomForestClassifier()

# Fit the model to the training data

forest_model.fit(X_train, y_train)

# Make predictions on the testing data

forest_predictions = forest_model.predict(X_test)
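
For a runnable multiclass example, the sketch below compares both classifiers on the three-class Iris dataset that ships with scikit-learn. The dataset choice, `max_depth=3`, and the fixed `random_state` values are assumptions made here for a small, reproducible demonstration.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Iris has three classes, so this is a multiclass problem.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

tree_model = DecisionTreeClassifier(max_depth=3, random_state=42)
forest_model = RandomForestClassifier(n_estimators=100, random_state=42)

results = {}
for name, clf in [("decision tree", tree_model), ("random forest", forest_model)]:
    clf.fit(X_train, y_train)
    results[name] = accuracy_score(y_test, clf.predict(X_test))
    print(f"{name}: accuracy = {results[name]:.2f}")
```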

Support Vector Machines (SVM): Handling Nonlinear Data

Support Vector Machines (SVM) are powerful algorithms for both classification and regression tasks. They can handle nonlinear data using kernel functions. Here’s an example of implementing SVM for classification:

from sklearn.svm import SVC

# Create a Support Vector Classifier

svm_model = SVC()

# Fit the model to the training data

svm_model.fit(X_train, y_train)

# Make predictions on the testing data

svm_predictions = svm_model.predict(X_test)
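
To illustrate why kernels matter, this sketch fits a linear and an RBF-kernel classifier on two concentric circles, a dataset that no straight line can separate. The synthetic dataset and its parameters are assumptions for illustration, and the scores are training accuracies, so they only show how well each kernel can fit this shape.

```python
from sklearn.datasets import make_circles
from sklearn.svm import SVC

# Two concentric rings of points: not separable by a straight line.
X, y = make_circles(n_samples=200, factor=0.3, noise=0.05, random_state=0)

linear_acc = SVC(kernel="linear").fit(X, y).score(X, y)
rbf_acc = SVC(kernel="rbf").fit(X, y).score(X, y)   # "rbf" is SVC's default kernel

print(f"linear kernel training accuracy: {linear_acc:.2f}")
print(f"RBF kernel training accuracy:    {rbf_acc:.2f}")
```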

Please note that the examples provided here are simplified implementations, and there are various parameters and techniques that can be further explored to improve the models’ performance.


Unsupervised Learning Algorithms

K-Means Clustering: Grouping Similar Data Points

K-means clustering is an unsupervised learning algorithm used to group similar data points together based on their features. It aims to partition the data into K clusters, where each data point belongs to the cluster with the nearest mean. Here’s an example of implementing k-means clustering using scikit-learn:

from sklearn.cluster import KMeans

# Create a KMeans clustering model with K clusters

kmeans_model = KMeans(n_clusters=3)

# Fit the model to the data

kmeans_model.fit(X_train)

# Assign clusters to the data points

cluster_labels = kmeans_model.predict(X_test)
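
A small self-contained run can make the result easier to interpret. The six points below are made up to form two obvious groups; `n_init` and `random_state` are set explicitly so the run is reproducible.

```python
import numpy as np
from sklearn.cluster import KMeans

# Hypothetical points forming two well-separated groups.
X = np.array([
    [0.0, 0.0], [0.5, 0.2], [0.2, 0.6],
    [10.0, 10.0], [10.4, 9.8], [9.7, 10.3],
])

kmeans_model = KMeans(n_clusters=2, n_init=10, random_state=0)
cluster_labels = kmeans_model.fit_predict(X)   # fit, then assign each point

print("labels:", cluster_labels)
print("centers:", kmeans_model.cluster_centers_)
print("inertia:", kmeans_model.inertia_)   # sum of squared distances to centers
```

The cluster numbers themselves are arbitrary; what matters is which points share a label.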

Principal Component Analysis (PCA): Dimensionality Reduction

Principal Component Analysis (PCA) is a technique used for dimensionality reduction. It transforms a high-dimensional dataset into a lower-dimensional space while retaining the most important information. PCA finds the principal components that explain the maximum variance in the data. Here’s an example of performing PCA using scikit-learn:

from sklearn.decomposition import PCA

# Create a PCA model with 2 components

pca_model = PCA(n_components=2)

# Fit the model to the data

pca_model.fit(X_train)

# Transform the data to the lower-dimensional space

transformed_data = pca_model.transform(X_test)
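
To check how much information the reduced representation keeps, you can inspect `explained_variance_ratio_`. The sketch below uses synthetic three-feature data in which two features are strongly correlated; the data is an assumption made for illustration.

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)
base = rng.normal(size=(100, 1))

# Three features: the second is almost a multiple of the first, the third is noise.
X = np.hstack([
    base,
    2 * base + rng.normal(scale=0.1, size=(100, 1)),
    rng.normal(scale=0.5, size=(100, 1)),
])

pca_model = PCA(n_components=2)
transformed = pca_model.fit_transform(X)
ratios = pca_model.explained_variance_ratio_

print("reduced shape:", transformed.shape)
print("variance explained per component:", ratios)
```

Because PCA is sensitive to feature scale, features measured in different units are often standardized before it is applied.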

Anomaly Detection: Identifying Outliers in Data

Anomaly detection is used to identify rare events or outliers in a dataset that deviate significantly from the norm. It can help detect fraudulent transactions, unusual behavior, or system failures. One popular method for anomaly detection is the Isolation Forest algorithm. Here’s an example of implementing anomaly detection using scikit-learn:

from sklearn.ensemble import IsolationForest

# Create an Isolation Forest model

iso_forest_model = IsolationForest()

# Fit the model to the data

iso_forest_model.fit(X_train)

# Compute anomaly scores for the testing data (lower scores are more anomalous; negative scores indicate outliers)

anomaly_scores = iso_forest_model.decision_function(X_test)
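
As a sketch of how the scores behave, the example below trains on synthetic points clustered around the origin and then scores one typical point and one far-away point. The data and the `random_state` values are assumptions for illustration.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(42)
X_train = rng.normal(loc=0.0, scale=1.0, size=(200, 2))   # "normal" behaviour

# One point near the bulk of the data and one far away from it.
X_test = np.array([[0.1, -0.2], [8.0, 8.0]])

iso_forest_model = IsolationForest(random_state=42)
iso_forest_model.fit(X_train)

scores = iso_forest_model.decision_function(X_test)   # lower = more anomalous
flags = iso_forest_model.predict(X_test)              # 1 = inlier, -1 = outlier

print("scores:", scores)
print("flags:", flags)
```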

Model Evaluation and Performance Metrics

Splitting Data into Training and Testing Sets

To evaluate machine learning models, it is common practice to split the data into separate training and testing sets. The training set is used to train the model, while the testing set is used to evaluate its performance. Here’s an example of splitting the data using scikit-learn:

from sklearn.model_selection import train_test_split

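# X is the full feature table and y the matching labels (for example, data[['feature1', 'feature2']] and labels from the earlier sections)

# Hold out 20% of the rows for testing; random_state=42 makes the split reproducible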

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
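
For classification it is often useful to keep the class proportions the same in both sets, which `train_test_split` supports through its `stratify` parameter. The tiny balanced dataset below is made up for illustration.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical dataset: 10 samples, 2 features, two balanced classes.
X = np.arange(20).reshape(10, 2)
y = np.array([0, 1] * 5)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

print("train shape:", X_train.shape)
print("test shape:", X_test.shape)
print("test labels:", y_test)
```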

Evaluating Model Performance: Accuracy, Precision, Recall, F1-Score

Various metrics can be used to evaluate the performance of machine learning models, depending on the task. Common classification metrics include accuracy, precision, recall, and F1-score. Here’s an example of computing these metrics using scikit-learn:

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score

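# y_true holds the actual labels and y_pred the model's predictions for the same rows

y_true = y_test

y_pred = model.predict(X_test)  # model is any fitted classifier, e.g. the logistic regression above

# Compute accuracy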

accuracy = accuracy_score(y_true, y_pred)

# Compute precision

precision = precision_score(y_true, y_pred)

# Compute recall

recall = recall_score(y_true, y_pred)

# Compute F1-score

f1 = f1_score(y_true, y_pred)
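
To show where these numbers come from, the sketch below computes the same four metrics by hand from the counts of true and false positives and negatives. The labels and predictions are made up for illustration.

```python
# Hypothetical ground-truth labels and model predictions (1 = positive class).
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]

pairs = list(zip(y_true, y_pred))
tp = sum(1 for t, p in pairs if t == 1 and p == 1)   # true positives
tn = sum(1 for t, p in pairs if t == 0 and p == 0)   # true negatives
fp = sum(1 for t, p in pairs if t == 0 and p == 1)   # false positives
fn = sum(1 for t, p in pairs if t == 1 and p == 0)   # false negatives

accuracy = (tp + tn) / len(pairs)     # share of all predictions that are correct
precision = tp / (tp + fp)            # share of predicted positives that are correct
recall = tp / (tp + fn)               # share of actual positives that were found
f1 = 2 * precision * recall / (precision + recall)   # harmonic mean of the two

print(f"accuracy={accuracy:.3f} precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
# → accuracy=0.625 precision=0.600 recall=0.750 f1=0.667
```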

Cross-Validation: Assessing Model Generalization

Cross-validation is a technique used to assess a model’s performance and generalization capabilities. It involves splitting the data into multiple subsets, performing training and testing on different combinations, and averaging the results.

Here’s an example of performing cross-validation using scikit-learn:

from sklearn.model_selection import cross_val_score

from sklearn.linear_model import LogisticRegression

# Create a Logistic Regression model

model = LogisticRegression()

# Perform cross-validation

scores = cross_val_score(model, X, y, cv=5)
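
The splitting step can be sketched in plain Python as follows. This is a simplified, unshuffled K-fold written for illustration (the helper name `k_fold_indices` is made up); for classifiers, scikit-learn's `cross_val_score` with an integer `cv` uses stratified folds instead.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for k consecutive folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


folds = list(k_fold_indices(10, 5))
for i, (train, test) in enumerate(folds):
    print(f"fold {i}: test={test} train={train}")
```

Each sample lands in the test set exactly once, and the final score is typically reported as the mean of the per-fold scores, for example `scores.mean()`.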

Introduction to Model Deployment

Saving and Loading Machine Learning Models

Once a machine learning model is trained, it can be saved and loaded for future use without retraining. Here’s an example of saving and loading a scikit-learn model using joblib, which is installed as a scikit-learn dependency:

from sklearn.linear_model import LogisticRegression

import joblib

# Create and train a Logistic Regression model

model = LogisticRegression()

model.fit(X_train, y_train)

# Save the model to a file

joblib.dump(model, 'model.pkl')

# Load the model from the file

loaded_model = joblib.load('model.pkl')
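
A quick way to confirm that persistence worked is to compare predictions before and after reloading. The sketch below does this with a temporary directory and made-up training data.

```python
import os
import tempfile

import joblib
import numpy as np
from sklearn.linear_model import LogisticRegression

# Hypothetical training data for illustration.
X_train = np.array([[1, 0.5], [2, 1.5], [3, 2.5], [4, 3.5]])
y_train = np.array([0, 0, 1, 1])

model = LogisticRegression().fit(X_train, y_train)

# Save to a temporary file and load it back.
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "model.joblib")
    joblib.dump(model, path)
    loaded_model = joblib.load(path)

X_new = np.array([[1.5, 1.0], [3.5, 3.0]])
same = np.array_equal(model.predict(X_new), loaded_model.predict(X_new))
print("Predictions match after reload:", same)
```

Files written this way are pickle-based, so only load model files from sources you trust, and use a compatible scikit-learn version when loading.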

Building a Basic Prediction System with New Data

After deploying a trained model, you can use it to make predictions on new, unseen data. Here’s an example of using a trained model to make predictions using new data:

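# new_data must contain the same feature columns, in the same order, as the training data

new_data = pd.DataFrame({'feature1': [7], 'feature2': [6.5]})  # hypothetical example values

# Apply the same preprocessing that was used for the training data, if any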

# Use the loaded model to make predictions on the new data

predictions = loaded_model.predict(new_data)

Considerations for Scaling and Production Deployment

When deploying machine learning models in production, it’s essential to consider scalability, performance, and robustness. Some considerations include optimizing code efficiency, handling large datasets, and implementing model monitoring. Frameworks like TensorFlow and PyTorch provide tools for production deployment.

Resources for Further Learning

Online Courses, Tutorials, and Books on Machine Learning

To further enhance your knowledge in machine learning, there are numerous online courses, tutorials, and books available. Some popular resources include:

- Coursera: “Machine Learning” by Andrew Ng

- Kaggle: Machine learning tutorials and competitions

- “Hands-On Machine Learning with Scikit-Learn and TensorFlow” by Aurélien Géron

Open-Source Machine Learning Projects and Libraries

There are various open-source machine learning projects and libraries that can assist you in your machine learning journey. Some widely used libraries include:

- scikit-learn: A comprehensive library for machine learning tasks in Python.

- TensorFlow: An open-source library for deep learning and neural networks.

- PyTorch: A popular deep learning library with a dynamic computational graph.

Communities and Forums for Machine Learning Enthusiasts

Engaging with communities and forums is an excellent way to connect with other machine learning enthusiasts, seek advice, and stay updated on the latest developments. Some active communities and forums include:

- Reddit: r/MachineLearning and r/LearnMachineLearning

- Stack Overflow: Machine learning tag

Conclusion

Recap of Key Concepts Covered

Throughout this guide, we covered essential concepts in machine learning, including data preparation, supervised and unsupervised learning algorithms, model evaluation, and deployment. We also explored key terminologies and provided code examples in Python.

Encouragement to Explore and Experiment with Machine Learning in Python

Machine learning is a vast and exciting field with endless possibilities. As you continue your journey, don’t hesitate to explore new algorithms, experiment with different datasets, and contribute to the machine learning community. With Python as a powerful tool and the resources available, you’re well-equipped to dive deeper into the world of machine learning.