Introduction to Data Cleaning Techniques

Data cleaning, also known as data cleansing or data scrubbing, is the process of identifying and correcting errors, inconsistencies, and inaccuracies in a dataset so that it can be trusted for analysis. Common tasks include removing duplicates, handling missing values, correcting inaccurate entries, and standardizing formats. Cleaner data leads to more reliable insights and better-informed strategic decisions.

Importance of Data Cleaning

Decisions are only as good as the data behind them. When duplicates, missing values, inconsistent formats, and entry errors are left in place, they distort summary statistics, bias models, and erode trust in reports. Cleaning the data first makes it accurate and consistent enough for analysis, modeling, and prediction, so that organizations can rely on the results and use their data effectively.

Role of Data Cleaning in Ensuring Data Quality

Data cleaning is a core step in data management and a direct lever on data quality. Removing duplicates and standardizing formats improves consistency, handling missing values improves completeness, and correcting errors improves accuracy. High-quality data is essential for informed decisions, accurate analyses, and meaningful insights. Cleaning data early also stops errors from propagating into downstream reports, models, and applications, which makes data-driven systems more reliable and robust across every sector that depends on them.

Benefits of Implementing Effective Data Cleaning Techniques

Implementing effective data cleaning techniques offers several significant benefits to organizations. Here is a table highlighting some of these advantages:

| Benefits of Effective Data Cleaning Techniques |
|---|
| Enhanced Data Accuracy |
| Improved Data Quality |
| Increased Data Integrity |
| Reliable Decision-Making |
| Better Business Insights |
| Enhanced Customer Experience |
| Minimized Risk of Errors and Inconsistencies |
| Increased Operational Efficiency |
| Improved Data Governance |
| Enhanced Regulatory Compliance |

By improving data accuracy, quality, and integrity, organizations can make more reliable decisions, gain better insights, and offer customers a better experience. Effective data cleaning also reduces errors and inconsistencies, improves operational efficiency, and supports compliance with regulatory requirements.

Understanding Common Data Quality Issues

Inaccurate Data Entry

Inaccurate data entry, such as stray whitespace, inconsistent capitalization, or text in a numeric column, can be cleaned with Python and pandas. Here’s an example using a sample dataset:

```python
import pandas as pd

# Sample dataset
data = {
    'ID': ['001', '002', '003', '004', '005'],
    'Name': ['john doe ', ' Jane Smith', 'Mike', ' Alice ', 'PETER PARKER'],
    'Age': [25, 32, '40s', 'Unknown', 28]
}

df = pd.DataFrame(data)

# Clean inaccurate data: trim whitespace and apply consistent title case
df['Name'] = df['Name'].str.strip().str.title()

# Convert Age to numbers; entries such as '40s' or 'Unknown' cannot be parsed
# and become missing, so the column can be stored as a nullable integer
df['Age'] = pd.to_numeric(df['Age'], errors='coerce').astype('Int64')

# Display cleaned data
print(df)
```

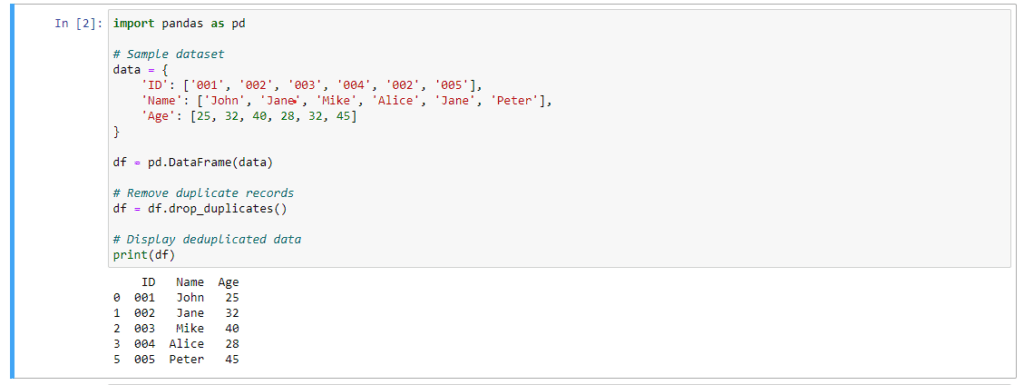
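Cleaning works best when paired with explicit validation rules that flag entries for review rather than silently changing them. The sketch below assumes two illustrative rules that are not part of the original example: an ID must be exactly three digits, and an age must be a number between 0 and 120. The malformed ID '04' is hypothetical, added only to show a failing check.

```python
import pandas as pd

# Raw sample data; the ID '04' is a hypothetical malformed entry
data = {
    'ID': ['001', '002', '003', '04', '005'],
    'Age': [25, 32, '40s', 'Unknown', 28],
}
df = pd.DataFrame(data)

# Rule 1 (assumed): IDs must be exactly three digits
valid_id = df['ID'].str.fullmatch(r'\d{3}')

# Rule 2 (assumed): Age must parse as a number between 0 and 120;
# unparseable values become NaN and therefore fail the range check
age_numeric = pd.to_numeric(df['Age'], errors='coerce')
valid_age = age_numeric.between(0, 120)

# Collect the failing rows for manual review instead of fixing them silently
invalid = df[~(valid_id & valid_age)]
print(invalid)
```

Keeping the failing rows separate makes it possible to decide case by case whether a value such as '40s' should be corrected, approximated, or left missing.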

Duplicate Records

Duplicate records can be identified and removed with pandas. Here’s an example using a sample dataset:

```python
import pandas as pd

# Sample dataset
data = {
    'ID': ['001', '002', '003', '004', '002', '005'],
    'Name': ['John', 'Jane', 'Mike', 'Alice', 'Jane', 'Peter'],
    'Age': [25, 32, 40, 28, 32, 45]
}

df = pd.DataFrame(data)

# Remove duplicate records
df = df.drop_duplicates()

# Display deduplicated data
print(df)
```
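Called without arguments, drop_duplicates() removes only rows that match in every column. Duplicates in real data often share a key but differ elsewhere, for example because one copy was updated later. The following sketch assumes ID is meant to be unique and uses hypothetical data in which ID '002' appears twice with different ages; keeping the first occurrence is an arbitrary choice that would depend on the data.

```python
import pandas as pd

# Hypothetical data: ID '002' appears twice with a different Age
data = {
    'ID': ['001', '002', '003', '002'],
    'Name': ['John', 'Jane', 'Mike', 'Jane'],
    'Age': [25, 32, 40, 33],
}
df = pd.DataFrame(data)

# A full-row comparison finds nothing, because the two '002' rows differ in Age
print(df.duplicated().sum())

# keep=False flags every row whose ID occurs more than once, for inspection
print(df[df.duplicated(subset=['ID'], keep=False)])

# Keep the first occurrence of each ID and renumber the index
deduped = df.drop_duplicates(subset=['ID'], keep='first').reset_index(drop=True)
print(deduped)
```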

Inconsistent Formatting and Standardization

Inconsistent formatting, such as names written in different letter cases, can be standardized with pandas string methods. Here’s an example using a sample dataset:

```python
import pandas as pd

# Sample dataset
data = {
    'ID': ['001', '002', '003', '004', '005'],
    'Name': ['john doe', 'Jane Smith', 'MIKE', 'Alice', 'peter parker'],
    'Age': [25, 32, 40, 28, 35]
}

df = pd.DataFrame(data)

# Standardize data formatting
df['Name'] = df['Name'].str.title()

# Display standardized data
print(df)
```

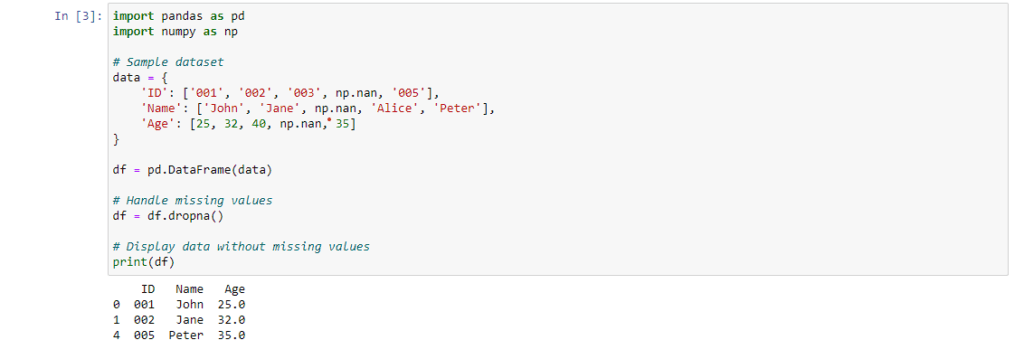
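Title-casing fixes capitalization, but inconsistent formatting often goes further, with the same value written in several different ways. One approach, sketched below with a hypothetical State column and an assumed mapping, is to normalize whitespace and case first and then map each known variant to a single canonical label.

```python
import pandas as pd

# Hypothetical column with several spellings of the same two states
df = pd.DataFrame({'State': ['NY', 'new york', ' New York ', 'CA', 'california']})

# Step 1: normalize whitespace and case so variants become comparable
normalized = df['State'].str.strip().str.lower()

# Step 2: map each known variant to one canonical code (assumed mapping)
canonical = {'ny': 'NY', 'new york': 'NY', 'ca': 'CA', 'california': 'CA'}
df['State'] = normalized.map(canonical)

# Values missing from the mapping become NaN, which makes unknown variants easy to spot
print(df)
```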

Missing or Null Values

The simplest way to handle missing or null values is to drop every row that contains one, using dropna(). Here’s an example using a sample dataset:

```python
import pandas as pd
import numpy as np

# Sample dataset
data = {
    'ID': ['001', '002', '003', np.nan, '005'],
    'Name': ['John', 'Jane', np.nan, 'Alice', 'Peter'],
    'Age': [25, 32, 40, np.nan, 35]
}

df = pd.DataFrame(data)

# Handle missing values
df = df.dropna()

# Display data without missing values
print(df)
```
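Because dropna() with its defaults discards any row with at least one missing value, it can remove more data than intended. A more cautious sketch, assuming that only a missing ID makes a row unusable, first counts the gaps per column, then drops rows only where the required field is missing and fills a non-key column with an explicit placeholder.

```python
import numpy as np
import pandas as pd

data = {
    'ID': ['001', '002', '003', np.nan, '005'],
    'Name': ['John', 'Jane', np.nan, 'Alice', 'Peter'],
    'Age': [25, 32, 40, np.nan, 35],
}
df = pd.DataFrame(data)

# Count missing values per column before deciding how to handle them
print(df.isna().sum())

# Assumption: a row is unusable only if its ID is missing
df_required = df.dropna(subset=['ID'])

# Fill remaining gaps in a non-key column with an explicit placeholder
df_required = df_required.assign(Name=df_required['Name'].fillna('Unknown'))
print(df_required)
```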

Outliers and Anomalies

Outliers and anomalies can be detected and filtered with pandas. Here’s an example using a sample dataset:

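```python
import pandas as pd

# Sample dataset
data = {
    'ID': ['001', '002', '003', '004', '005'],
    'Value': [100, 200, 300, 5000, 400]
}

df = pd.DataFrame(data)

# Remove outliers with the interquartile range (IQR) rule.
# A mean +/- 2 standard deviations rule would keep 5000 here: with only five
# points, the outlier inflates the standard deviation enough to stay inside the bounds.
q1 = df['Value'].quantile(0.25)
q3 = df['Value'].quantile(0.75)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr

df = df[df['Value'].between(lower, upper)]

# Display data without outliers
print(df)
```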
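Rules built on the mean and standard deviation are sensitive to the very outliers they try to detect, because an extreme value shifts both statistics. A more robust alternative, shown here as a separate technique, is the modified z-score, which replaces the mean with the median and the standard deviation with the median absolute deviation (MAD). The 0.6745 scaling constant and the 3.5 cutoff follow a commonly cited convention; treat both as tunable. This sketch flags anomalies instead of deleting them and assumes the MAD is non-zero.

```python
import pandas as pd

data = {
    'ID': ['001', '002', '003', '004', '005'],
    'Value': [100, 200, 300, 5000, 400],
}
df = pd.DataFrame(data)

# Median and median absolute deviation (MAD) are barely moved by one extreme value
median = df['Value'].median()
mad = (df['Value'] - median).abs().median()  # assumed non-zero

# Modified z-score; 0.6745 makes MAD comparable to a standard deviation for normal data
df['Modified_Z'] = 0.6745 * (df['Value'] - median) / mad

# Flag (rather than drop) points whose absolute score exceeds the 3.5 cutoff
df['Is_Outlier'] = df['Modified_Z'].abs() > 3.5
print(df)
```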

Summary Table

Here is a summary table of the techniques discussed using the sample datasets:

| Data Cleaning Issue | Sample Dataset | Technique Applied |
|---|---|---|
| Inaccurate Data Entry | ID: ['001', '002', '003', '004', '005']; Name: ['john doe ', ' Jane Smith', 'Mike', ' Alice ', 'PETER PARKER']; Age: [25, 32, '40s', 'Unknown', 28] | Trim whitespace and title-case names; convert Age to a nullable integer column |
| Duplicate Records | ID: ['001', '002', '003', '004', '002', '005']; Name: ['John', 'Jane', 'Mike', 'Alice', 'Jane', 'Peter']; Age: [25, 32, 40, 28, 32, 45] | Drop exact duplicate rows |
| Inconsistent Formatting and Standardization | ID: ['001', '002', '003', '004', '005']; Name: ['john doe', 'Jane Smith', 'MIKE', 'Alice', 'peter parker']; Age: [25, 32, 40, 28, 35] | Convert names to title case |
| Missing or Null Values | ID: ['001', '002', '003', np.nan, '005']; Name: ['John', 'Jane', np.nan, 'Alice', 'Peter']; Age: [25, 32, 40, np.nan, 35] | Drop rows containing missing values |
| Outliers and Anomalies | ID: ['001', '002', '003', '004', '005']; Value: [100, 200, 300, 5000, 400] | Filter out extreme values |

Advanced Data Cleaning Techniques

Data Imputation

Statistical Imputation Methods

Statistical imputation fills missing values with a summary statistic of the observed values, such as the mean, median, or mode. Here’s an example of mean imputation using Python code and a sample dataset:

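```python
import numpy as np
import pandas as pd

# Sample dataset (np.nan marks missing ages, so the column stays numeric)
data = {
    'ID': ['001', '002', '003', '004', '005'],
    'Age': [25, np.nan, 30, np.nan, 28]
}

df = pd.DataFrame(data)

# Perform mean imputation; assigning the result back avoids chained
# fillna(..., inplace=True), which newer pandas versions warn about
mean_age = df['Age'].mean()
df['Age'] = df['Age'].fillna(mean_age)

# Display data with imputed values
print(df)
```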
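The mean is pulled toward extreme values, so the median is often a safer choice for skewed numeric columns, while the mode (most frequent value) suits categorical columns. A sketch of both, using a hypothetical City column alongside Age:

```python
import numpy as np
import pandas as pd

data = {
    'ID': ['001', '002', '003', '004', '005'],
    'Age': [25, np.nan, 30, np.nan, 28],
    'City': ['Paris', None, 'Paris', 'Lyon', None],  # hypothetical categorical column
}
df = pd.DataFrame(data)

# Median imputation for the numeric column (robust to extreme values)
df['Age'] = df['Age'].fillna(df['Age'].median())

# Mode imputation for the categorical column; mode() can return several
# tied values, so take the first one
df['City'] = df['City'].fillna(df['City'].mode().iloc[0])
print(df)
```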

Machine Learning-Based Imputation

Machine learning models can predict missing values from other available features. Here’s an example that predicts missing ages from income with a random forest, using Python code and a sample dataset:

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestRegressor

# Sample dataset (np.nan marks missing values, keeping the columns numeric)
data = {
    'ID': ['001', '002', '003', '004', '005'],
    'Age': [25, np.nan, 30, np.nan, 28],
    'Income': [50000, 60000, 70000, 80000, np.nan]
}

df = pd.DataFrame(data)

# Rows whose Age is missing are the ones to impute
missing_age = df['Age'].isna()

# Train only on rows where both the target (Age) and the feature (Income) are known
train = df[~missing_age & df['Income'].notna()]
X_train = train[['Income']]
y_train = train['Age']

# Predict Age only where it is missing and Income is available
to_predict = missing_age & df['Income'].notna()
X_test = df.loc[to_predict, ['Income']]

# Perform imputation using Random Forest regression
model = RandomForestRegressor(n_estimators=100, random_state=42)
model.fit(X_train, y_train)

# Update the missing values with predicted values
df.loc[to_predict, 'Age'] = model.predict(X_test)

# Display data with imputed values
print(df)
```
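scikit-learn also provides ready-made imputers that apply the same idea. KNNImputer, for example, replaces each missing value with the average of that column across the k most similar rows, measuring similarity only on features both rows have. The sketch below uses n_neighbors=2 purely because the sample is tiny; with features on very different scales, such as Age and Income, scaling them before KNN imputation is usually advisable.

```python
import numpy as np
import pandas as pd
from sklearn.impute import KNNImputer

data = {
    'ID': ['001', '002', '003', '004', '005'],
    'Age': [25, np.nan, 30, np.nan, 28],
    'Income': [50000, 60000, 70000, 80000, np.nan],
}
df = pd.DataFrame(data)

numeric_cols = ['Age', 'Income']

# Each missing value becomes the mean of that column over the 2 nearest rows,
# with distances computed on the features both rows have (nan_euclidean)
imputer = KNNImputer(n_neighbors=2)
df[numeric_cols] = imputer.fit_transform(df[numeric_cols])
print(df)
```

Unlike the random forest example, this fills gaps in every numeric column at once, including the missing Income.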

Data Normalization and Transformation

Standardization Techniques

Standardization rescales each numerical column to have zero mean and unit variance. Here’s an example using Python code and a sample dataset:

```python
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Sample dataset
data = {
    'ID': ['001', '002', '003', '004', '005'],
    'Age': [25, 30, 35, 40, 45],
    'Income': [50000, 60000, 70000, 80000, 90000]
}

df = pd.DataFrame(data)

# Perform standardization
scaler = StandardScaler()
df[['Age', 'Income']] = scaler.fit_transform(df[['Age', 'Income']])

# Display standardized data
print(df)
```
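StandardScaler applies z = (x - mean) / std to each column, using the population standard deviation (ddof=0). pandas' std() defaults to the sample standard deviation (ddof=1), so a hand-written version needs ddof=0 to reproduce the scaler's output. Since this section also covers normalization, the sketch ends with min-max scaling, which maps each column onto the [0, 1] range.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler

df = pd.DataFrame({
    'Age': [25, 30, 35, 40, 45],
    'Income': [50000, 60000, 70000, 80000, 90000],
})

# Manual standardization with the population standard deviation (ddof=0)
manual = (df - df.mean()) / df.std(ddof=0)

# StandardScaler also uses the population standard deviation, so the results agree
scaled = StandardScaler().fit_transform(df)
print(np.allclose(manual.to_numpy(), scaled))

# Min-max normalization rescales each column to the [0, 1] range instead
min_max = (df - df.min()) / (df.max() - df.min())
print(min_max)
```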

Logarithmic and Exponential Transformations

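A logarithmic transformation compresses large values, which reduces right skew and dampens the influence of extreme values. The exponential function is its inverse and maps log-transformed values back to the original scale. Here’s an example using Python code and a sample dataset: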

```python
import pandas as pd
import numpy as np

# Sample dataset
data = {
    'ID': ['001', '002', '003', '004', '005'],
    'Revenue': [1000, 2000, 3000, 10000, 5000]
}

df = pd.DataFrame(data)

# Perform logarithmic transformation (requires strictly positive values)
df['Log_Revenue'] = np.log(df['Revenue'])

# Perform exponential transformation: exp undoes log, restoring the original scale
df['Exp_Revenue'] = np.exp(df['Log_Revenue'])

# Display transformed data
print(df)
```
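np.log is undefined for zero and negative values (np.log(0) returns -inf with a warning). For non-negative data that contains zeros, np.log1p, which computes log(1 + x), is a common substitute, and np.expm1 reverses it. A sketch with a hypothetical revenue column that includes a zero:

```python
import numpy as np
import pandas as pd

# Hypothetical revenue column that includes a zero
df = pd.DataFrame({'Revenue': [0, 1000, 2000, 3000, 10000]})

# log1p computes log(1 + x), so zero maps to 0 instead of -inf
df['Log1p_Revenue'] = np.log1p(df['Revenue'])

# expm1 computes exp(x) - 1, the inverse of log1p, restoring the original scale
df['Restored_Revenue'] = np.expm1(df['Log1p_Revenue'])
print(df)
```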

Data Integration and Matching

Merging Data from Different Sources

Data from different sources can be combined by concatenating datasets or by merging them on a common column. Here’s an example of a merge using Python code and sample datasets:

```python
import pandas as pd

# Sample datasets
data1 = {
    'ID': ['001', '002', '003'],
    'Name': ['John', 'Jane', 'Mike']
}

data2 = {
    'ID': ['001', '002', '004'],
    'Age': [25, 30, 35]
}

df1 = pd.DataFrame(data1)
df2 = pd.DataFrame(data2)

# Perform merging; pd.merge uses an inner join by default, so only IDs
# present in both datasets ('001' and '002') appear in the result
merged_df = pd.merge(df1, df2, on='ID')

# Display merged data
print(merged_df)
```
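Because pd.merge performs an inner join by default, records that exist in only one dataset ('003' and '004' in this sample) are dropped without warning. When checking how well two sources line up, an outer join with indicator=True keeps every record and adds a _merge column that labels each row 'both', 'left_only', or 'right_only':

```python
import pandas as pd

df1 = pd.DataFrame({'ID': ['001', '002', '003'], 'Name': ['John', 'Jane', 'Mike']})
df2 = pd.DataFrame({'ID': ['001', '002', '004'], 'Age': [25, 30, 35]})

# Outer join keeps every ID from both sides; indicator=True adds a '_merge' column
merged = pd.merge(df1, df2, on='ID', how='outer', indicator=True)

# Rows marked 'left_only' or 'right_only' had no partner in the other dataset
unmatched = merged[merged['_merge'] != 'both']
print(unmatched)
```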

Matching and Linking Data

Matching and linking data involve identifying records that refer to the same entity across different datasets, even when their values are spelled slightly differently. Here’s an example of fuzzy name matching using Python code and a sample dataset:

```python
import pandas as pd
from fuzzywuzzy import fuzz, process  # the package is now maintained under the name "thefuzz"

# Sample datasets
data1 = {
    'Name': ['John Doe', 'Jane Smith', 'Mike'],
    'ID': ['001', '002', '003']
}

data2 = {
    'Name': ['Jon Doe', 'Jane Smith', 'Mike Johnson'],
    'Age': [25, 30, 35]
}

df1 = pd.DataFrame(data1)
df2 = pd.DataFrame(data2)

# Perform fuzzy matching. With a Series as choices, extractOne returns
# (match, score, index), or None when no candidate reaches score_cutoff
def best_match(name):
    result = process.extractOne(name, df2['Name'], scorer=fuzz.ratio, score_cutoff=80)
    return result if result is not None else (None, None, None)

matches = df1['Name'].apply(best_match)
matches_df = pd.DataFrame(matches.tolist(), columns=['Match', 'Score', 'Index'], index=df1.index)
df1 = pd.concat([df1, matches_df], axis=1)

# Display matched data ('Mike' has no match above the cutoff)
print(df1)
```
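If adding a third-party dependency is undesirable, the standard-library difflib module supports a similar sketch through get_close_matches, which scores candidates with a 0-to-1 similarity ratio. The cutoff of 0.8 below mirrors score_cutoff=80 in the fuzzywuzzy example; note that difflib compares strings case-sensitively, whereas fuzzywuzzy's process.extractOne normalizes case by default. After matching, the matched names serve as a join key that links each person to an age.

```python
import difflib

import pandas as pd

df1 = pd.DataFrame({'Name': ['John Doe', 'Jane Smith', 'Mike'], 'ID': ['001', '002', '003']})
df2 = pd.DataFrame({'Name': ['Jon Doe', 'Jane Smith', 'Mike Johnson'], 'Age': [25, 30, 35]})

candidates = df2['Name'].tolist()


def closest(name):
    # get_close_matches returns up to n candidates whose similarity ratio >= cutoff
    hits = difflib.get_close_matches(name, candidates, n=1, cutoff=0.8)
    return hits[0] if hits else None


df1['Match'] = df1['Name'].apply(closest)

# Link records: a left join keeps unmatched rows from df1 with a missing Age
linked = df1.merge(df2.rename(columns={'Name': 'Match'}), on='Match', how='left')
print(linked)
```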

Best Practices for Data Cleaning

Establishing Data Cleaning Workflows

Efficient, effective data cleaning depends on a structured workflow: a defined sequence of steps that is applied the same way every time the data is refreshed. A systematic workflow keeps results consistent and streamlines the cleaning process. Key steps to consider include data profiling, handling missing values, removing duplicates, standardizing formats, and validating data integrity.
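One way to make such a workflow explicit in code is to write each step as a function and chain the functions with DataFrame.pipe, so the order of operations is fixed and repeatable. The function names, rules, and data below are hypothetical and simply mirror the steps listed above.

```python
import numpy as np
import pandas as pd


def profile(df):
    # Data profiling: report shape and missing values per column before changes
    print(df.shape, df.isna().sum().to_dict())
    return df


def drop_missing_ids(df):
    # Missing values: a row without an ID cannot be linked to anything
    return df.dropna(subset=['ID'])


def remove_duplicates(df):
    # Duplicates: keep the first row for each ID
    return df.drop_duplicates(subset=['ID'], keep='first')


def standardize_names(df):
    # Formats: trim whitespace and apply title case
    return df.assign(Name=df['Name'].str.strip().str.title())


def validate(df):
    # Integrity: stop the pipeline if IDs are still not unique
    if not df['ID'].is_unique:
        raise ValueError('duplicate IDs remain after cleaning')
    return df


raw = pd.DataFrame({
    'ID': ['001', '002', '002', np.nan],
    'Name': [' john doe', 'JANE SMITH', 'JANE SMITH', 'Mike'],
})

clean = (
    raw.pipe(profile)
    .pipe(drop_missing_ids)
    .pipe(remove_duplicates)
    .pipe(standardize_names)
    .pipe(validate)
    .reset_index(drop=True)
)
print(clean)
```

Because each step is a small function, steps can be tested individually and reordered or extended without rewriting the rest of the pipeline.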

Documenting Data Cleaning Processes

Documenting the data cleaning processes is essential for maintaining transparency and facilitating knowledge sharing. By documenting the steps taken during data cleaning, you can provide a clear record of the techniques and transformations applied to the data. This documentation helps ensure reproducibility and allows other team members to understand and contribute to the data cleaning process. It is also valuable for compliance purposes and maintaining data governance.
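Alongside written documentation, a cleaning script can record what each step actually did. The sketch below uses a hypothetical helper, logged_step, that applies a cleaning function and appends the step's description and the row counts before and after it to a log that could be saved with the cleaned data.

```python
import pandas as pd

cleaning_log = []  # one entry per applied step


def logged_step(df, func, description):
    # Apply a cleaning function and record its effect on the row count
    result = func(df)
    cleaning_log.append({
        'step': description,
        'rows_before': len(df),
        'rows_after': len(result),
    })
    return result


df = pd.DataFrame({'ID': ['001', '002', '002', None], 'Age': [25, 32, 32, 40]})

df = logged_step(df, lambda d: d.drop_duplicates(), 'Drop exact duplicate rows')
df = logged_step(df, lambda d: d.dropna(subset=['ID']), 'Drop rows without an ID')

# The log could be stored next to the cleaned data, e.g. with DataFrame.to_csv
print(pd.DataFrame(cleaning_log))
```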

Regular Data Quality Audits

Conducting regular data quality audits is crucial for maintaining the integrity of your data. Audits involve assessing the quality, accuracy, completeness, and consistency of the data. By performing audits at regular intervals, you can identify and rectify any data issues promptly. This process involves examining data against predefined quality criteria, identifying discrepancies, and taking necessary actions to address them. Regular audits help in detecting trends, improving data quality over time, and ensuring the effectiveness of your data cleaning techniques.
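Predefined quality criteria can be encoded as named checks that are rerun at each audit. In this sketch the rules (Age present, ID unique, Age between 0 and 120) and the sample data are hypothetical; each check yields a pass rate, and any rate below 1.0 points to rows that need attention.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    'ID': ['001', '002', '002', '004'],
    'Age': [25, np.nan, 32, 150],
})

# Each check is a boolean Series: True where a row meets the criterion.
# between() returns False for a missing Age, so gaps also fail the validity check.
checks = {
    'completeness: Age present': df['Age'].notna(),
    'uniqueness: ID not repeated': ~df['ID'].duplicated(keep=False),
    'validity: Age within 0-120': df['Age'].between(0, 120),
}

# Pass rate per criterion; anything below 1.0 needs investigation
report = pd.Series({name: mask.mean() for name, mask in checks.items()})
print(report)
```

Tracking these pass rates across audits makes it easier to spot trends, such as a source system that starts sending more incomplete records.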

Following these best practices helps keep your data accurate, reliable, and suitable for analysis and decision-making. By establishing workflows, documenting processes, and conducting regular audits, you can maintain high data quality standards and increase the overall value of your data.

Conclusion: Harnessing the Power of Data Cleaning Techniques

The Importance of Reliable and Clean Data

Data cleaning techniques play a pivotal role in ensuring the reliability and accuracy of data. By addressing issues such as inaccurate data entry, duplicate records, inconsistent formatting, missing values, and outliers, data cleaning helps establish a solid foundation for data analysis and decision-making. Clean data is essential for obtaining meaningful insights and making informed business decisions.

The Ongoing Process of Data Cleaning

Data cleaning is not a one-time task but an ongoing process. As data evolves and new information is added, it is important to continuously apply data cleaning techniques to maintain data quality. Regular data cleaning ensures that the data remains relevant, up-to-date, and aligned with changing business needs.

Achieving Accurate Insights and Informed Decision-Making through Data Cleaning

Data cleaning techniques enable organizations to extract accurate insights from their data. By eliminating errors, inconsistencies, and redundancies, data cleaning enhances the reliability and trustworthiness of data. This, in turn, empowers decision-makers to make informed choices, identify patterns, detect trends, and uncover valuable insights that can drive business growth and success.

In conclusion, data cleaning techniques are essential for ensuring the reliability, accuracy, and usability of data. By employing effective data cleaning techniques as an ongoing process, organizations can unlock the true potential of their data, derive accurate insights, and make informed decisions that drive success in today’s data-driven world.